PSA

Blog posts will resume when Ukraine wins.

Blog posts will resume when Ukraine wins.

About BeckerCAD

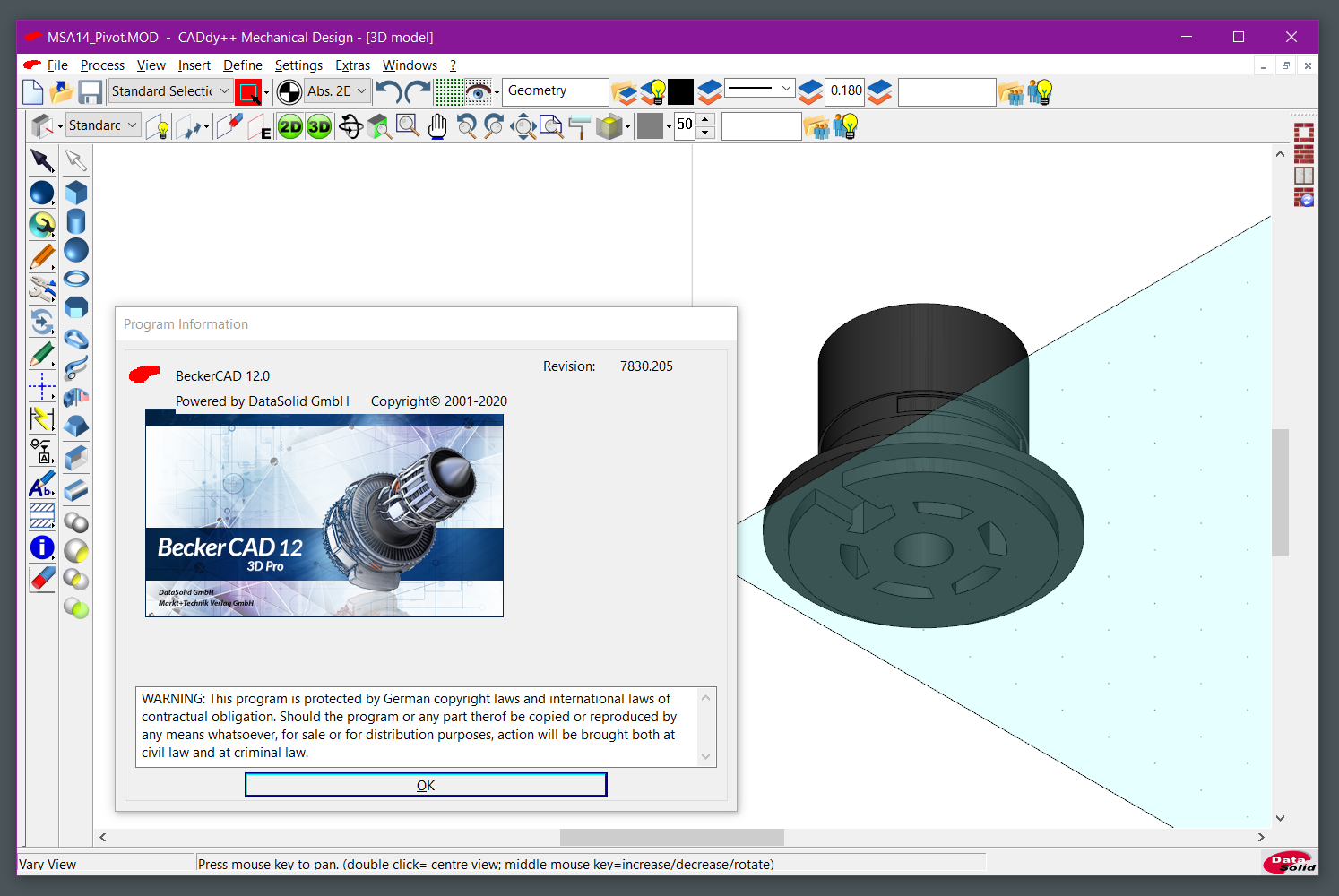

A month after I started playing with BeckerCAD, I think I understand why it was released and why one may be interested in using this tool instead of, say, IntelliCAD-based solutions, ViaCAD, or CorelCAD.

My take on this piece of software is mixed. On one hand, it is clearly aiding you with design on a computer with support for DWG drawing and ACIS SAT solids. On the other hand, they packed this powerful functionality into an application using outdated design and deployment practices, a proprietary file format, and documentation that works against you.

TL;DR: I think this application is okay for basic sketches and some modeling of small 3-D printable things. Building anything other than these will be a painful experience. If you are wondering if you should buy it, I would heavily lean "no".

Update (2021-01-03): I've written more on BeckerCAD, and why you probably shouldn't buy it. Go read the sequel.

Occasionally I run into an interesting piece of software while doing unrelated things, and this time I stumbled upon a Windows-only “BeckerCAD 12 3D Pro” application on sale in SoftMaker store, a company developing the blazing fast Linux/Windows/Mac OS X SoftMaker Office suite, and a free FreeOffice version of one.

I like Google Docs as much as the next guy, but sometimes I enjoy looking at what else is out there. So I bought a license to the office suite that I’ve had my eyes on for some time, and put a $20 BeckerCAD12 3D Pro in the cart along for the ride.

For a long time my last post here was about my laptop, and even though I have changed jobs, locations, and interests, I did not find anything worth sharing.

Now, having re-published a few posts I wrote on Reddit to my blog, I'd like to close the loop on some of the projects I have been working on in the past.

Due to copyright attribution requirements and potential conflict of interest, I stopped contributing to MediaFire Python Open SDK and the duplicity backend. This resulted in the MediaFire backend removal from duplicity.

I no longer have access to the original MediaFire/mediafire-python-open-sdk repository.

I was approached by a user of MediaFire to add another maintainer, so szlaci83 is now a co-maintainer of Python MediaFire client library.

Throughout 2020 I set a target of publishing at least 1 music piece, and the result is https://soundcloud.com/roman-yepishev/sets/album-2020.

For some time in 2019 I have been tinkering with the first generation version of WD MyCloud which ran on Comcerto2000 platform (AKA FreeScale/NXP QorIQ LS1024A), and had some success with booting the 3.19 kernel, described in my WD Community post).

While I got most of the board working, the networking performance was awful, and after a few weeks I gave up.

Almost no involvement with the project since we left Michigan.

I disabled the commenting system provided by disqus, and feel free to write rye@ an e-mail.

Cheers.

This post originally appeared on Reddit.

Both Gen 1 2014-2015 and Gen 2 2016+ Chevy Spark models have a variant of Jatco JF015E.

Originally 2014 Sparks came with transmission software that was always shifting to the low gear, causing shocks, resulting in premature belt and pulley metal wear. An update was issued for CVT firmware to prefer staying in high gear on deceleration (PI1309). 2015+ Sparks are coming with this update preinstalled. This is now a normal behavior and can't be changed.

CVTs on all 2014+ Spark models are not sealed. They are serviceable and you should perform the maintenance at least every 45K miles unless you drive on the highway all the time.

CVT performance is heavily influenced by the state of the fluid inside. Deteriorated fluid will cause the belt to slip producing shudder. No, this shudder won’t disappear on its own, it will only get worse.

Shudder is different from slow smooth acceleration, which is a feature of this transmission.

Fluid is not the only thing that can go wrong, so contact your dealer if you have transmission concerns. If you are driving a 2014 model and did not get the CVT TCM update at the dealer, the chances of transmission damage increase, so there is a campaign for 2014-2015 model year vehicles to replace damaged transmissions.

This post originally appeared on Reddit.

I've been meaning to look into the performance of MY2016 spark for the past two years, so I finally sat down, went through the documents and used a wonderful site https://www.carspecs.us/calculator/0-60 to finally come to the conclusion.

Before we start, note that I am in no way a car enthusiast, so if you see glaring omissions or errors, please comment, and again this is specific to MY2016+, earlier model years have different engines and, probably, transmissions.

Anyway, the conclusion is:

Your Chevrolet Spark is slow because it has a relatively low power engine and it weighs a lot (for good reasons).

This post was originally published on Reddit.

The following is based on official service documentation, personal experience and research driven by desire to know every little bit about this first car of mine. Sharing this because it may be interesting if this is your first car, but it may explain things in general. This post is specific to US MY2016+ models.

TL;DR: Air in the car generally:

Now, details.

This post originally appeared on Reddit.

Just a heads up that if you bought your 2016 Chevrolet Spark before May 2017, then the LG BYOM2 infotainment system can be updated by your dealer to the 05.02.2017 build to:

BYOM2 - Bring Your Own Media v2 system was running on Sparks from 2016 to 2018, then replaced for model year 2019 by INFO3 system running a version of Android.

This post originally appeared as a Github Gist.

Since I was unable to find a specific answer to how can one create a Dell recovery disk from within Linux, I decided to write the steps here.

If you write the CD image directly to the USB drive (or create a new partition and write it there), the laptop will not boot. You need your USB media to be in FAT32 format with the contents of the recovery ISO.

Format the drive and create a filesystem where $USB_DEVICE is your USB drive (check with fdisk -l, it may be

something like /dev/sdb):

# parted $USB_DEVICE (parted) mklabel msdos (parted) mkpart primary fat32 0% 100% (parted) quit # mkfs.vfat -n DellRestore ${USB_DEVICE}1

Copy the contents of the ISO file to the new FAT32 partition:

# mkdir /mnt/{source,target} # mount -o loop,ro ~/Downloads/2DF4FA00_W10x64ROW_pro\(DL\)_v3.iso /mnt/source # mount ${USB_DEVICE}1 /mnt/target # rsync -r /mnt/source/. /mnt/target/ # umount /mnt/source # umount /mnt/target

Reboot and start your machine from the USB drive.

This should have been obvious, but I've spent a few hours testing various ways to boot from that ISO (unetbootin, writing the image directlry to drive, writing to first partition), so I hope it is going to be useful to somebody else as well.

Current status: Laptop is fully operational.

Display flickering, complete freeze when moved, gap between LCD panel and top cover, grinding fan noise.

Laptop 1: Display flickering. MB, LCD Panel, IO Cables replaced, chassis issues, audio and sensors broke, exchanged.

Laptop 2: Display flickering, completely locks up if moved. LCD Panel replaced, MB replaced, locks up on any key pressure (when Intel drivers are running), won't return to working state on reboot until powercycled enough times. Disassembled the laptop and put electrical tape over the "bumps" under the motherboard. Fan and heatsink replaced, attempted to replace LCD panel again due to brightness gradient, the "new" panel had all bottom clips broken.