OpenStack Swift and Keystone. Setting up Cloud Storage

Having played a lot with HP Cloud and the Canonical OpenStack installation, I decided to install OpenStack on my own home server. To better understand the processes involved, I decided not to use the devstack script and instead followed the installation guides, using the packages already available in Ubuntu Server 12.04 LTS (Precise Pangolin).

Surprisingly, setting up Keystone, Nova Compute, and Glance took almost no effort, and I was even able to launch an instance of Ubuntu Server 12.04 in nested KVM, on a Compute node that was itself a KVM virtual machine. So I had two levels of virtualization and it still worked quite well.

Then I decided to make Swift (Object Store) authenticate against Keystone (instead of the built-in swauth), and this took a bit longer than expected.

I was using the following documents to prepare the installation:

This post assumes you are setting up a local private installation of OpenStack without HTTPS, which is fine on a private LAN but a very bad decision for a public network. Passwords travel in clear text. And by clear text I mean this:

    POST /v2.0/tokens HTTP/1.1
    Host: compute.example.com:35357
    Content-Length: 132
    content-type: application/json
    accept-encoding: gzip, deflate
    accept: application/json
    user-agent: python-novaclient

    {"auth": {"tenantName": "you@example.com-tenant", "passwordCredentials": {"username": "you@example.com", "password": "testing123"}}}
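To see that nothing in that request is encoded or hashed, here is a small sketch that rebuilds the same body with Python's standard json module. The variable names are my own; the credentials are the example values from the captured request.

```python
import json

# Example credentials copied from the captured request; not real secrets.
auth_request = {
    "auth": {
        "tenantName": "you@example.com-tenant",
        "passwordCredentials": {
            "username": "you@example.com",
            "password": "testing123",
        },
    }
}

# json.dumps with its default separators produces the same text
# that appears on the wire.
body = json.dumps(auth_request)

print(len(body))              # body size in bytes (the text is pure ASCII)
print("testing123" in body)   # the password is readable as-is
```

The printed length should agree with the Content-Length header of the captured request, which is a quick way to confirm that the body is plain JSON and nothing more.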

The instructions at hastexo state that they do not cover Keystone integration with Swift. So when they tell you to use a templated catalog, keep in mind that the default catalog does not contain the Swift endpoint. Here’s what you will need to append to the default:

    catalog.RegionOne.object-store.publicURL = http://proxy-server.example.com:8080/v1.0/AUTH_$(tenant_id)s
    catalog.RegionOne.object-store.name = Swift Service

Q: Where is “object-store” service defined?

A: Keystone itself does not care about the names and URLs of these endpoints; it only hands them out, and the services themselves use the information. For example, the swift application queries the endpoints, searches for the object-store type, and then directs all storage requests to the corresponding publicURL. All other service types are listed in default_catalog.templates.
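The lookup can be sketched roughly as follows. This is not the swift client's actual code; `find_public_url` and the sample catalog are hypothetical, and the catalog is only shaped like the serviceCatalog list returned with a v2.0 token.

```python
def find_public_url(service_catalog, service_type):
    """Return the first publicURL for the given service type, or None."""
    for service in service_catalog:
        if service.get("type") != service_type:
            continue
        for endpoint in service.get("endpoints", []):
            if "publicURL" in endpoint:
                return endpoint["publicURL"]
    return None


# Hypothetical catalog with made-up entries, for illustration only.
catalog = [
    {
        "type": "identity",
        "name": "Identity Service",
        "endpoints": [
            {"region": "RegionOne",
             "publicURL": "http://compute.example.com:5000/v2.0"},
        ],
    },
    {
        "type": "object-store",
        "name": "Swift Service",
        "endpoints": [
            {"region": "RegionOne",
             "publicURL": "http://proxy-server.example.com:8080/v1.0/AUTH_abc"},
        ],
    },
]

print(find_public_url(catalog, "object-store"))
```

The service name ("Swift Service") plays no part in the lookup; only the type does, which is why the name in the template can be anything.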

Q: What is tenant_id?

A: Keystone knows several variables it can dynamically substitute with values based on the client – tenant_id and user_id. These are defined in TemplatedCatalog.
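As a rough illustration of that substitution (a sketch of the idea, not necessarily how TemplatedCatalog implements it), the `$(name)s` placeholders can be expanded with ordinary Python string formatting. `expand_endpoint` is a hypothetical helper.

```python
def expand_endpoint(template, **values):
    """Expand $(name)s placeholders in a catalog template line."""
    # Turn "$(tenant_id)s" into "%(tenant_id)s" and let Python's
    # %-formatting perform the substitution from the values dict.
    return template.replace("$(", "%(") % values


template = "http://proxy-server.example.com:8080/v1.0/AUTH_$(tenant_id)s"

# tenant_id "abc" and user_id "u1" are made-up example values.
print(expand_endpoint(template, tenant_id="abc", user_id="u1"))
```

Values that the template does not mention (user_id here) are simply ignored.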

If you set up cert_file and key_file as the multinode howto says, your proxy-server will expect HTTPS (i.e. HTTP over SSL) connections. So adjust the URL scheme accordingly.

AUTH_ prefix

By default Keystone’s swift_auth middleware uses reseller_prefix = AUTH_.

So if you set up a tenant with ID abc and set publicURL without the AUTH_ prefix, you will get a very unhelpful message in the proxy-server syslog.

The real-world log line will look like this:

    proxy-server tenant mismatch: 9830818c942e40bbabaad02454524597 != 9830818c942e40bbabaad02454524597 (txn: txfd89a4d692394b3589c7d31c9f78ebb2) (client_ip: 192.168.1.110)

The message does not show the real compared value and, in fact, should print “abc != AUTH_abc”. You have two options:

- If you are setting up a single authentication server and the backend nodes are going to serve only one set of accounts, then you can unset the AUTH_ prefix in the middleware configuration on proxy-server and in the endpoints catalog.
- If you have a massive deployment and it is possible to have resellers for the storage you provide, you set up a different reseller_prefix on each proxy-server that is going to serve a different reseller, and update the keystone catalog and the middleware configuration on proxy-server.
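The check behind the mismatch can be pictured like this. It is a simplified sketch, not the swift_auth source; all three function names are hypothetical.

```python
def expected_account(tenant_id, reseller_prefix="AUTH_"):
    """Account name the middleware expects for a given tenant."""
    return reseller_prefix + tenant_id


def account_from_path(path):
    """Extract the account part: "/v1.0/AUTH_abc/container" -> "AUTH_abc"."""
    parts = path.lstrip("/").split("/")
    return parts[1] if len(parts) > 1 else None


def tenant_matches(path, tenant_id, reseller_prefix="AUTH_"):
    """True when the account in the URL is the one expected for the tenant."""
    return account_from_path(path) == expected_account(tenant_id, reseller_prefix)


# publicURL without the prefix, middleware with the default prefix: mismatch
print(tenant_matches("/v1.0/abc", "abc"))
# prefix present on both sides: match
print(tenant_matches("/v1.0/AUTH_abc", "abc"))
# prefix dropped on both sides: match
print(tenant_matches("/v1.0/abc", "abc", reseller_prefix=""))
```

Either option works as long as the catalog URL and the middleware agree on the prefix.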

So, to simplify our deployment, we are dropping the AUTH_ prefix completely. Update the keystone service template:

Update the reseller_prefix in proxy-server.conf on proxy-server itself (you don’t need to change anything on storage nodes):

    [filter:keystone]
    paste.filter_factory = keystone.middleware.swift_auth:filter_factory
    reseller_prefix =
    operator_roles = admin, swiftoperator

I will get to operator_roles setting later. [filter:keystone] is then referenced in [pipeline:main] pipeline value and it can be called swiftauth or similar:

Proxy Server

Important: By default proxy-server will try to connect to keystone using HTTPS. Earlier versions would hang indefinitely but since the fix in 891687 the response from swift will be:

If you are following the tutorials, keystone will not listen for HTTPS, so you will need to modify proxy-server.conf:

authtoken is also referenced from the [pipeline:main] pipeline. It is the filter whose filter_factory points at keystone.middleware.auth_token, and it should come before the [filter:keystone] filter. You can see all the options in the Middleware architecture document.

You will also want to add delay_auth_decision = 1 to the authtoken section if you want to allow anonymous access (such as public containers); see The Auth System document.

Zones

This is not actually about keystone but it’s nice to know.

First of all, if you feel lost about the concept of a Ring and OpenStack: The Rings is not helping, you should definitely read this analysis by Julien Danjou. You will find out slightly more than you need for a simple setup, and the article also describes the bottlenecks, which you must know about should you start a large-scale deployment.

So, a zone can be basically a disk, a server, a rack, or anything that groups the storage devices. If you have two servers and each holds a storage zone then PSU failure of one server will not affect the other zone.

For initial testing purposes you can actually start with a single zone, just set the number of replicas for the ring to be 1:

    swift-ring-builder account.builder create 18 1 1
    swift-ring-builder container.builder create 18 1 1
    swift-ring-builder object.builder create 18 1 1
    # partition power ------------------------^ ^
    # number of replicas -----------------------'

Please note that if you want to change the number of replicas later, you will have to re-create the rings from scratch (see 958144).
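The first number passed to create is the partition power: the ring is divided into 2 to that power partitions. A quick back-of-the-envelope sketch (the helper names are mine, and the per-device figure assumes devices of equal weight):

```python
def partition_count(part_power):
    """Number of partitions in a ring built with the given partition power."""
    return 2 ** part_power


def partition_replicas_per_device(part_power, replicas, devices):
    """Average partition replicas per device, assuming equal device weights."""
    return partition_count(part_power) * replicas / devices


# The single-zone test setup above: power 18, 1 replica, and (assumed) 1 device.
print(partition_count(18))
print(partition_replicas_per_device(18, replicas=1, devices=1))
```

This is also why the partition power is worth choosing with the final cluster size in mind, while the replica count for a test setup can be as low as 1.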

Tenants and Roles

Remember operator_roles in [filter:keystone] earlier? Only the users granted those roles will be able to access the storage server. If the user does not have one of the roles listed there, then all the requests will fail with 401 Unauthorized.
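In other words, the middleware compares the roles Keystone reports for the user with the configured list. A minimal sketch of that comparison, with hypothetical helper names and not taken from the swift_auth source:

```python
def parse_roles(value):
    """Split a comma-separated operator_roles value into clean role names."""
    return [role.strip() for role in value.split(",") if role.strip()]


def is_operator(user_roles, operator_roles):
    """True if the user holds at least one of the operator roles."""
    return any(role in operator_roles for role in user_roles)


# Same value as operator_roles in [filter:keystone] above.
operator_roles = parse_roles("admin, swiftoperator")

print(is_operator(["swiftoperator"], operator_roles))
print(is_operator(["Member"], operator_roles))  # "Member" is a made-up role
```

Holding any one of the listed roles is enough; the user does not need all of them.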

Tenants (earlier called projects) allow grouping the users. In order to use swift you will need to create a role, a tenant, a user and assign the role to the user within the tenant:

You can also use admin user and role set up in the tutorials above, but creating these users is quite straightforward.

    $ keystone role-create --name swiftoperator
    +----------+----------------------------------+
    | Property |              Value               |
    +----------+----------------------------------+
    | id       | e77542b0b6884ea4ada3ec9eb6b75c6f |
    | name     | swiftoperator                    |
    +----------+----------------------------------+

    $ keystone user-create --name you@example.com --pass testing123
    +----------+-----------------------------------------+
    | Property |                  Value                  |
    +----------+-----------------------------------------+
    | email    | None                                    |
    | enabled  | True                                    |
    | id       | 866be627384044a49b3a172a1cbef74f        |
    | name     | you@example.com                         |
    | password | $6$rounds=40000$Bl/EL3YgSUqIpSr/$Lc...  |
    | tenantId | None                                    |
    +----------+-----------------------------------------+

    $ keystone tenant-create --name "you@example.com-tenant"
    +-------------+----------------------------------+
    |   Property  |              Value               |
    +-------------+----------------------------------+
    | description | None                             |
    | enabled     | True                             |
    | id          | aaee4b5b277c40aaad201742db9e20c3 |
    | name        | you@example.com-tenant           |
    +-------------+----------------------------------+

    $ keystone user-role-add --user 866be627384044a49b3a172a1cbef74f --role e77542b0b6884ea4ada3ec9eb6b75c6f --tenant_id aaee4b5b277c40aaad201742db9e20c3

The names of the user and tenant can be arbitrary. For example, hpcloud uses your-email@example.com-default-tenant for tenant name and your email as username.

You will also need to create a service tenant to be used by swift when checking your user credentials. This is done in the hastexo keystone setup script. Look for keystone tenant-create --name "$SERVICE_TENANT_NAME". This information should be added to the [filter:authtoken] section in proxy-server.conf:

    [filter:authtoken]
    paste.filter_factory = keystone.middleware.auth_token:filter_factory
    # use http instead of https
    auth_protocol = http
    # keystone host
    auth_host = 127.0.0.1
    # keystone port
    auth_port = 35357
    admin_tenant_name = ${tenant name for service users, e.g. "service"}
    admin_user = ${admin user created in keystone, e.g. swift}
    admin_password = ${password set for "swift" user when created}
    admin_token = ${admin_token from keystone.conf}
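One caution when copying this section: keep each comment on its own line rather than after a value. The sketch below uses Python 3's standard configparser to show why. It assumes the loader used for proxy-server.conf treats comments the same way, which may not hold for every version, so take it as an illustration rather than a statement about Swift itself.

```python
import configparser

# A cut-down sample; the first option carries a trailing "#" comment.
SAMPLE = """
[filter:authtoken]
auth_protocol = http # - use http instead of https
# a comment on its own line is ignored
auth_host = 127.0.0.1
"""

parser = configparser.ConfigParser()
parser.read_string(SAMPLE)
section = parser["filter:authtoken"]

# With the default parser settings the trailing comment becomes
# part of the value instead of being stripped.
print(repr(section["auth_protocol"]))
print(repr(section["auth_host"]))
```

With the comment on a separate line, auth_host comes back as the bare address, which is what the middleware needs.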

For the Folsom release you will need to set signing_dir in the authtoken filter to /var/cache/swift/keystone-signing. Otherwise swift-proxy-server will fail to start under upstart, and will complain about $HOME/keystone-signing when started manually.

Great, now we check whether the user can get an auth token:

    $ nova --os_username you@example.com --os_password testing123 --os_tenant_name=you@example.com-tenant --os_auth_url http://compute.example.com:35357/v2.0 credentials
    +------------------+---------------------------------------------------------------------------+
    | User Credentials |                                   Value                                   |
    +------------------+---------------------------------------------------------------------------+
    | id               | 866be627384044a49b3a172a1cbef74f                                          |
    | name             | you@example.com                                                           |
    | roles            | [{u'id': u'e77542b0b6884ea4ada3ec9eb6b75c6f', u'name': u'swiftoperator'}] |
    | roles_links      | []                                                                        |
    | username         | you@example.com                                                           |
    +------------------+---------------------------------------------------------------------------+
    ...

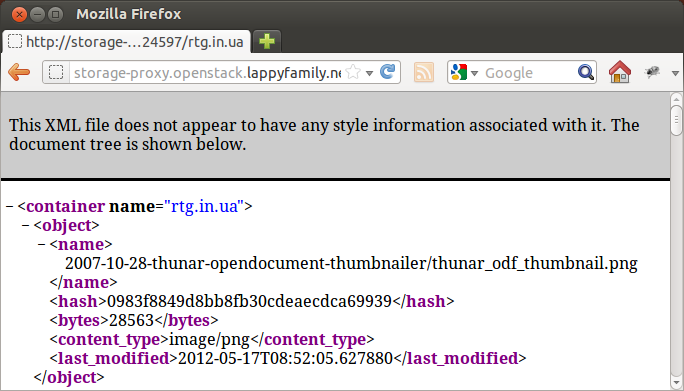

And finally, we use swift cli:

    $ swift -U you@example.com-tenant:you@example.com -K testing123 -A http://compute.openstack.lappyfamily.net:35357/v2.0 -V 2.0 stat
    Account: aaee4b5b277c40aaad201742db9e20c3
    Containers: 0
    Objects: 0
    Bytes: 0
    Accept-Ranges: bytes

Congratulations, the Swift/Keystone pairing is set up properly.

Finally, there is a bug in python-webob that causes Swift HEAD request to return the following response:

This is 920197. Until I or somebody else forwards the patch to the Debian maintainer, it can be fixed with the patch attached to the bug report (the fix is already in the trunk of webob thanks to a quick response from Martin Vidner).

S3 Emulation and Keystone

If you follow the instructions at Configuring Swift with S3 emulation to use Keystone, proxy-server will not actually be able to talk to Keystone because the /s3tokens URL is not being handled in the default configuration.

I found the solution in a message by Thanathip Limna at 956562: a [filter:s3_extension] config needs to be added, and s3_extension needs to be added to the pipelines of public_api and admin_api right after ec2_extension:

    [filter:s3_extension]
    paste.filter_factory = keystone.contrib.s3:S3Extension.factory

    [pipeline:public_api]
    pipeline = token_auth admin_token_auth xml_body json_body debug ec2_extension s3_extension public_service

    [pipeline:admin_api]
    pipeline = token_auth admin_token_auth xml_body json_body debug ec2_extension s3_extension crud_extension admin_service
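If you would rather script the pipeline change than edit it by hand, the edit amounts to inserting one name after another in a space-separated list. A small sketch: `add_after` is a hypothetical helper, and the "before" pipeline is my assumption of what the public_api default looks like without s3_extension.

```python
def add_after(pipeline, new_filter, anchor):
    """Insert new_filter right after anchor in a space-separated pipeline."""
    names = pipeline.split()
    if new_filter in names:
        return pipeline  # already present, nothing to do
    # names.index raises ValueError if the anchor filter is missing.
    names.insert(names.index(anchor) + 1, new_filter)
    return " ".join(names)


# Assumed original value of the public_api pipeline (without s3_extension).
public_api = ("token_auth admin_token_auth xml_body json_body debug "
              "ec2_extension public_service")

print(add_after(public_api, "s3_extension", "ec2_extension"))
```

Running it a second time on the result leaves the pipeline unchanged, so the edit is safe to repeat.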

If you don’t add this, any request to Swift with S3 credentials fails with Internal Server Error:

    HTTP/1.1 500 Internal Server Error
    Content-Type: text/plain
    Content-Length: 1119
    Date: Sun, 20 May 2012 08:31:12 GMT
    Connection: close

    Traceback (most recent call last):
      File "/usr/lib/python2.7/dist-packages/eventlet/wsgi.py", line 336, in handle_one_response
        result = self.application(self.environ, start_response)
      File "/usr/lib/python2.7/dist-packages/swift/common/middleware/healthcheck.py", line 38, in __call__
        return self.app(env, start_response)
      File "/usr/lib/python2.7/dist-packages/swift/common/middleware/memcache.py", line 47, in __call__
        return self.app(env, start_response)
      File "/usr/lib/python2.7/dist-packages/swift/common/middleware/swift3.py", line 479, in __call__
        res = getattr(controller, req.method)(env, start_response)
      File "/usr/lib/python2.7/dist-packages/swift/common/middleware/swift3.py", line 181, in GET
        body_iter = self.app(env, self.do_start_response)
      File "/usr/lib/python2.7/dist-packages/keystone/middleware/s3_token.py", line 157, in __call__
        (resp, output) = self._json_request(creds_json)
      File "/usr/lib/python2.7/dist-packages/keystone/middleware/s3_token.py", line 91, in _json_request
        response.reason))
    ServiceError: Keystone reply error: status=404 reason=Not Found

If you have modified the reseller_prefix in the swift_auth middleware of proxy-server, you will need to change it in [filter:s3token] too, otherwise you will get this response from the S3 emulation layer:

    <?xml version="1.0" encoding="UTF-8"?>
    <Error>
      <Code>InvalidURI</Code>
      <Message>Could not parse the specified URI</Message>
    </Error>

And that’s what will be in proxy-server syslog:

So now we have Swift S3 emulation working with keystone too.